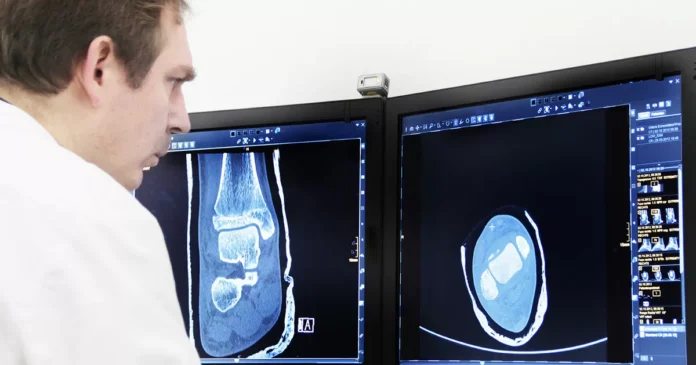

Grok, an artificial intelligence model offered as a subscription-based service, has been drawing attention in the medical field with its promise of accurate medical image analysis. Its developers say it can diagnose medical conditions precisely and efficiently. However, physicians and researchers have expressed doubts about the model’s capabilities, and privacy experts have raised concerns about how patient data is protected.

The use of artificial intelligence in medicine is not new, but its potential has drawn growing attention in recent years. As healthcare relies more heavily on technology, AI is increasingly seen as a way to improve patient outcomes and streamline clinical workflows. Grok AI, in particular, has caught many people’s attention with its promise to transform medical image analysis.

According to its developers, Grok AI uses deep learning algorithms to analyze medical images and provide accurate diagnoses. They claim the model was trained on a large body of data, which they say enables it to detect a wide range of medical conditions. The model is also said to learn continuously, becoming more accurate with each use.

However, physicians and researchers are not entirely convinced. While they acknowledge the potential of AI in healthcare, they believe Grok AI’s abilities are limited. The model may be useful for detecting common medical conditions but less effective at diagnosing rare or complex diseases, because its training data may not cover all the ways such conditions can present, which makes it less reliable in those cases.

Some experts have also raised concerns about the lack of transparency in Grok AI’s algorithm. Its developers have not disclosed the specific data sets used for training, which makes it difficult for physicians and researchers to judge how the model arrives at its diagnoses and where it is likely to fail. This lack of transparency could be a significant obstacle to gaining the trust of the medical community.

Privacy is another area of concern. Because the model relies on large amounts of medical data for training and improvement, it depends on access to patient information, which raises questions about how securely and confidentially that data is stored and used. With data breaches now common, protecting patient privacy must be a top priority, and the developers of Grok AI need to address these concerns with robust security measures.

Despite these concerns, Grok AI could still make a significant impact in the medical field. If it can analyze medical images quickly and accurately, it could save time, reduce human error, and ultimately improve patient outcomes. For it to be truly effective, however, the model needs to overcome the limitations highlighted by physicians and researchers.

The developers of Grok AI also need to work closely with the medical community to better understand its needs. Physicians and researchers can provide valuable feedback on the model’s accuracy and usability, which can help refine and improve it. A collaborative approach is essential if Grok AI is to become an effective tool in medicine.

In conclusion, Grok AI shows promise for medical image analysis. However, its abilities are limited, and concerns about privacy and transparency still need to be addressed. With collaboration between its developers and the medical community, Grok AI could fulfill that promise and become a valuable tool in the fight against disease. As technology continues to advance, its potential must be balanced against ethical and privacy considerations; with the right approach, Grok AI could be a game-changer in healthcare.